End to End Memory Networks Explained

Two grand challenges in artificial intelligence research have been to build models that can make multiple computational steps in the service of answering a question or completing a task, and models that can describe long-term dependencies in sequential data.

The input and output memory cells, denoted by $m_i$ and $c_i$, are obtained by embedding each input $x_i$ with two embedding matrices, $A$ and $C$.
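As a rough illustration of how such memory cells might be built, the sketch below assumes a bag-of-words sentence encoding and two embedding matrices named `A` and `C`. The vocabulary size, embedding dimension, and toy word ids are made up for the example, and the matrices are stored with one row per word so that a lookup is a row index.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, d = 6, 4  # hypothetical sizes, chosen only for the example

# Embedding matrices stored as (vocab_size, d): row j is the vector of word j.
A = rng.normal(scale=0.1, size=(vocab_size, d))  # input memory embedding
C = rng.normal(scale=0.1, size=(vocab_size, d))  # output memory embedding

def embed_bow(sentence, E):
    """Bag-of-words encoding: sum the embedding rows of the word ids."""
    return E[sentence].sum(axis=0)

# Three toy sentences, each a list of word ids (illustrative data only).
story = [[0, 1, 2], [3, 1, 4], [5, 2]]

m = np.stack([embed_bow(s, A) for s in story])  # input memory cells m_i
c = np.stack([embed_bow(s, C) for s in story])  # output memory cells c_i
```

Each row of `m` and `c` corresponds to one stored sentence, so both arrays have shape `(number of sentences, d)`.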

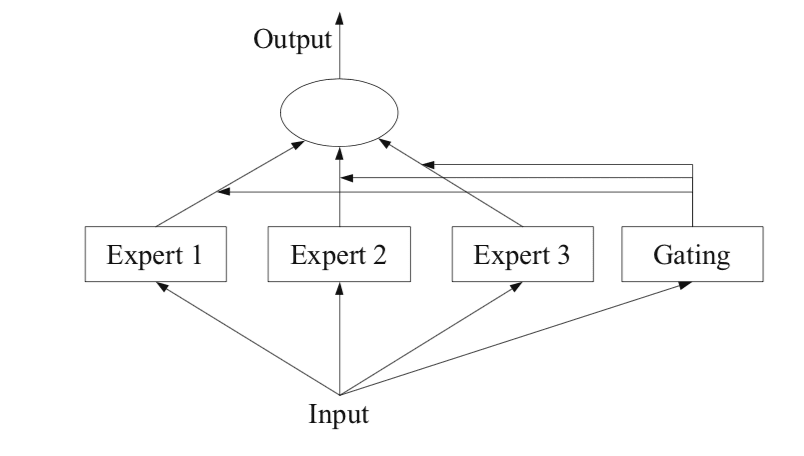

The MemN2N architecture was introduced by Sukhbaatar et al. (2015). Its main motivation is to answer questions about a body of text. The architecture is a form of Memory Network (Weston et al., 2015).

The authors evaluate the model on QA and language modeling tasks. The answer is predicted as

$$\hat{a} = \text{Softmax}(W u^{K+1}) = \text{Softmax}(W(o^K + u^K)).$$
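The answer prediction step, a softmax over $W(o^K + u^K)$, can be sketched in NumPy as follows. This is a minimal illustration, assuming the last hop has already produced a state `u_K` and a memory output `o_K`; the random values and sizes are placeholders, not trained parameters.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(z - z.max())
    return e / e.sum()

rng = np.random.default_rng(1)
d, vocab_size = 4, 6                   # hypothetical sizes

W = rng.normal(size=(vocab_size, d))   # answer prediction matrix
u_K = rng.normal(size=d)               # state entering the last hop
o_K = rng.normal(size=d)               # output of the last memory layer

a_hat = softmax(W @ (o_K + u_K))       # distribution over candidate answers
predicted_id = int(a_hat.argmax())     # index of the most likely answer word
```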

MemN2N is a neural network with a recurrent attention model over a large external memory. It is a soft-attention version of MemNN with a flexible read-only memory. Training is end-to-end: only the final output is needed for supervision, so simple back-propagation suffices. Multiple memory lookups (hops) let the model consider several memories before deciding on an output, which gives it more reasoning power.
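The soft attention and repeated memory lookups described above might look like this in NumPy. It is a sketch under assumptions: `memory_hops` is a hypothetical helper name, each hop receives precomputed input and output memory arrays, and the state passed to the next hop is the sum of the current state and the memory output.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(z - z.max())
    return e / e.sum()

def memory_hops(u, memories):
    """Run one soft-attention lookup per (m, c) pair.

    u:        (d,) query embedding
    memories: list of (m, c) pairs, each array of shape (n, d)
    Returns the final state and the attention weights of every hop.
    """
    attention = []
    for m, c in memories:
        p = softmax(m @ u)   # match the state against each input memory cell
        o = p @ c            # attention-weighted sum of output memory cells
        u = u + o            # state passed on to the next hop
        attention.append(p)
    return u, attention

# Toy usage with random placeholders: 5 memory slots, dimension 4, 3 hops.
rng = np.random.default_rng(2)
n, d, hops = 5, 4, 3
memories = [(rng.normal(size=(n, d)), rng.normal(size=(n, d))) for _ in range(hops)]
u_final, attention = memory_hops(rng.normal(size=d), memories)
```

Because the attention is a softmax rather than a hard selection, every step is differentiable and the whole stack can be trained from the final output alone.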

MemN2N (Sukhbaatar et al., 2015) is the first version of MemNN that can be trained end-to-end, which requires little supervision during training and makes it applicable to realistic scenarios.

The paper explores two types of weight tying within the model: adjacent and layer-wise (RNN-like). The original Torch code from Facebook is also available. A Memory Network combines a memory component that can be read from and written to with the inference capabilities of a neural network model.

In the case of QA, the network inputs are a list of sentences, a query, and (during training) an answer. MemN2N is based on Memory Networks by Weston, Chopra, and Bordes (ICLR 2015). That earlier model uses hard attention, which requires explicit supervision of attention during training. This is only feasible for simple tasks and severely limits the application of the model.

MemN2N is a continuous form of the Memory Network with end-to-end training, so it can be applied to more domains. Figure 2 shows only a single-layer version of the proposed model.

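The MemN2N architecture introduced by Sukhbaatar et al. (2015) consists of two main components: supporting memories and final answer prediction. With adjacent weight tying, the answer prediction matrix is also constrained to be the same as the final output embedding, i.e. $W^\top = C^K$.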

The output embedding for one layer is the input embedding for the one above, i.e. $A^{k+1} = C^k$. This code requires TensorFlow. The data directory contains a sample of the Penn Tree Bank (PTB) corpus, a popular benchmark.
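The adjacent tying rule, where the output embedding of one layer is reused as the input embedding of the next, can be expressed as plain parameter sharing. The sketch below is an assumption-laden illustration: `adjacent_tied_params` is a hypothetical helper, matrices are stored as `(vocab_size, d)` (the transpose of the paper's layout, so $W^\top = C^K$ becomes `W is C[-1]`), and the question embedding `B` is tied to the first input embedding as in the paper's adjacent scheme.

```python
import numpy as np

def adjacent_tied_params(vocab_size, d, hops, seed=0):
    """Build embeddings for `hops` layers with adjacent weight tying."""
    rng = np.random.default_rng(seed)
    # hops + 1 distinct matrices are enough to cover every layer.
    E = [rng.normal(scale=0.1, size=(vocab_size, d)) for _ in range(hops + 1)]
    A = E[:hops]   # A[k]: input embedding of layer k
    C = E[1:]      # C[k]: output embedding of layer k, same object as A[k + 1]
    B = A[0]       # question embedding tied to the first input embedding
    W = C[-1]      # answer matrix tied to the last output embedding
    return A, C, B, W

A, C, B, W = adjacent_tied_params(vocab_size=6, d=4, hops=3)
```

Sharing the array objects (rather than copying them) means a gradient update to one role updates the tied role as well.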

The authors propose a recurrent memory-based model that can reason over multiple hops and be trained end to end with standard gradient descent. A related paper uses end-to-end memory networks to store contextual knowledge, which can be exploited dynamically during testing to carry knowledge over and model long-term knowledge for multi-turn understanding. Supporting memories are in turn comprised of a set of input and output memory representations with memory cells.

The architecture is a form of Memory Network (Weston et al., 2015), but unlike the model in that work, it is trained end-to-end and hence requires significantly less supervision during training, making it more generally applicable in realistic settings. A TensorFlow implementation of End-To-End Memory Networks for language modeling is available (see Section 5).

The description and the diagrams of end-to-end memory networks (MemN2N) are based on End-To-End Memory Networks by Sainbayar Sukhbaatar et al.

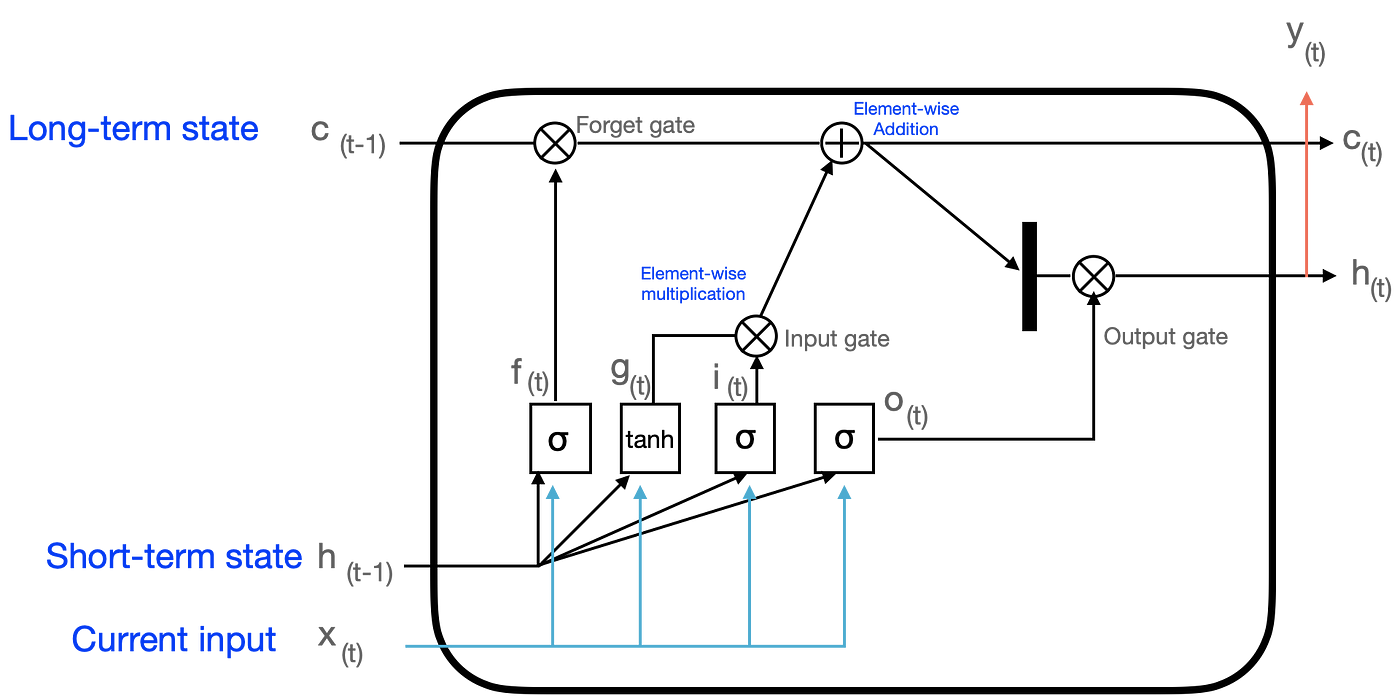

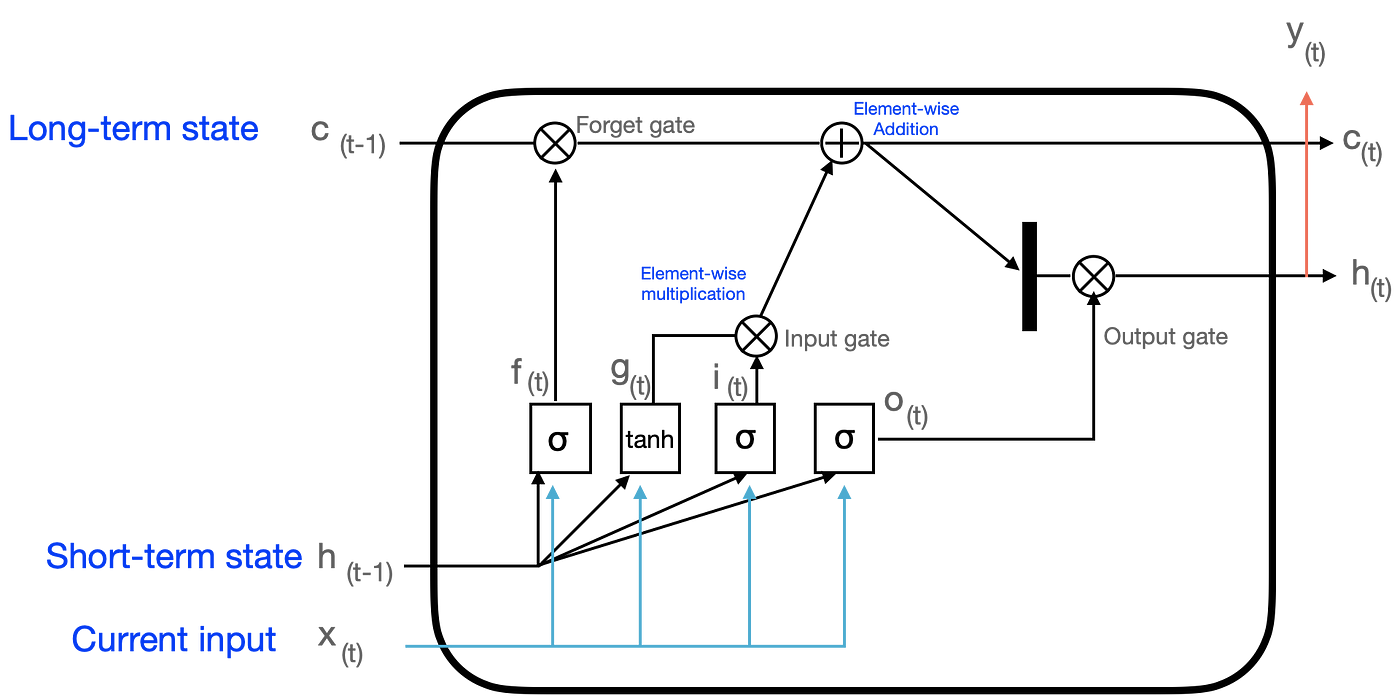

The memory network is trained end-to-end and is weakly supervised. The solution extends to sentence generation and performs better than previous comparable RNNs and LSTMs.

The motivation is that many neural networks lack a long-term memory component, and their existing memory component, encoded by states and weights, is too small and not compartmentalized enough to accurately remember facts.

At the top of the network, the input to W also combines the input and the output of the top memory layer.

We map each input x into its corresponding cluster and perform inference within that cluster only, instead of over the whole memory space.
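One way to picture cluster-restricted inference is the sketch below. It rests on several assumptions not stated in the text: cluster centroids are given in advance (for example from k-means), clusters are assigned by squared Euclidean distance, and matching inside a cluster uses a dot-product score. All function names are hypothetical.

```python
import numpy as np

def nearest_cluster(x, centroids):
    """Index of the centroid closest to x (squared Euclidean distance)."""
    return int(np.argmin(((centroids - x) ** 2).sum(axis=1)))

def build_buckets(memories, centroids):
    """Group memory indices by the cluster each memory falls into."""
    buckets = {}
    for i, mem in enumerate(memories):
        buckets.setdefault(nearest_cluster(mem, centroids), []).append(i)
    return buckets

def best_match_in_cluster(query, memories, centroids, buckets):
    """Score only the memories that share the query's cluster."""
    candidates = buckets.get(nearest_cluster(query, centroids), [])
    if not candidates:
        return None
    scores = memories[candidates] @ query
    return candidates[int(np.argmax(scores))]

# Toy data: four 2-D memories and two centroids (illustrative values only).
memories = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
centroids = np.array([[1.0, 0.0], [0.0, 1.0]])
buckets = build_buckets(memories, centroids)
match = best_match_in_cluster(np.array([0.2, 0.8]), memories, centroids, buckets)
```

The trade-off is speed against recall: only one bucket is scored, so a relevant memory that landed in a different cluster is never considered.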

The proposed model has input memory (key) vectors representing all inputs, a query vector to which the model needs to respond (such as the last decoder hidden state), and output memory (value) vectors. It is an extension of RNNsearch and can perform multiple hops (computational steps) over the memory per symbol.

Our model takes a discrete set of inputs $x_1, \dots, x_n$ that are to be stored in the memory and a query $q$, and outputs an answer $a$.

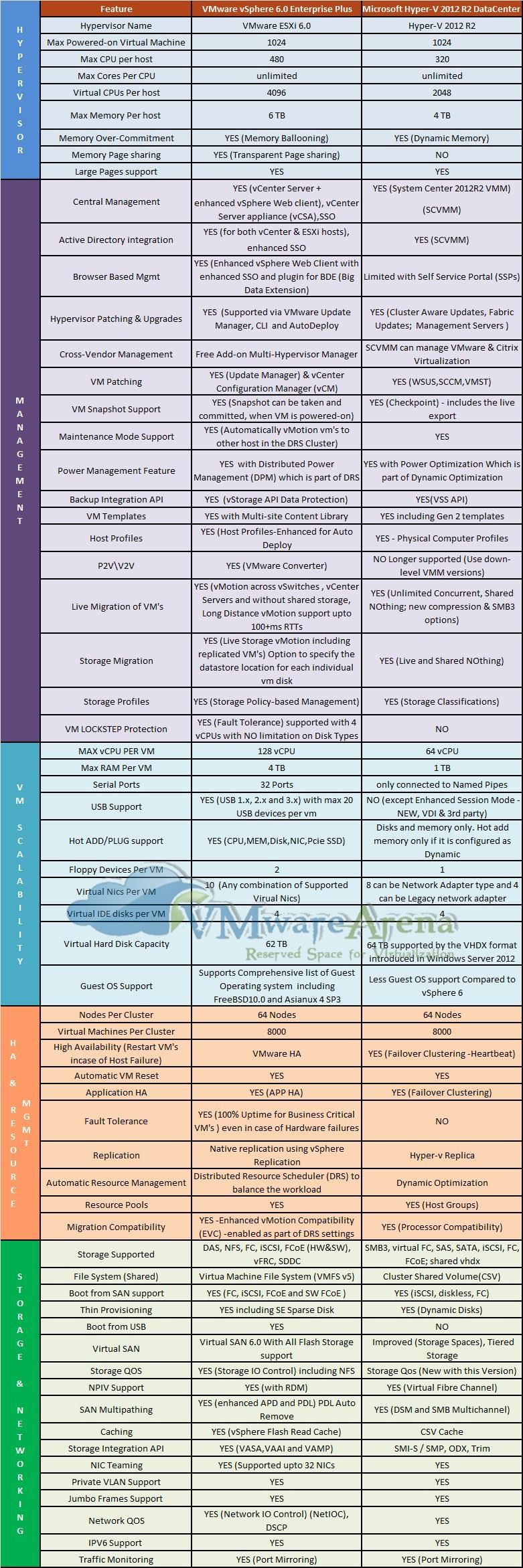
